Using mobile robots for SLAM research

A core challenge in robotics is navigating a dynamic, ever-changing world. Simultaneous localisation and mapping (SLAM) is crucial for systems that must keep track of their location within an environment while building a map of it. SLAM algorithms are used in navigation, robotic mapping, and odometry for virtual and augmented reality.

A team from ShanghaiTech University in China is working with Jackal UGV from Clearpath Robotics to collect open-source sensor datasets that support SLAM research and to share them with other roboticists.

Creating a database for the SLAM community

The team’s advanced mapping robot, which uses Jackal UGV as its base, leverages the university’s academic, research-focused environment for SLAM research, a computation the team considers essential for most mobile robotic applications.

The team recognised that datasets, which store the sensor data collected by robots, are at the core of SLAM research: they allow the repeatable and reproducible results needed to compare different SLAM approaches. While there are some popular datasets, such as the Intel Dataset or KITTI, these are limited in the sensor data they contain.

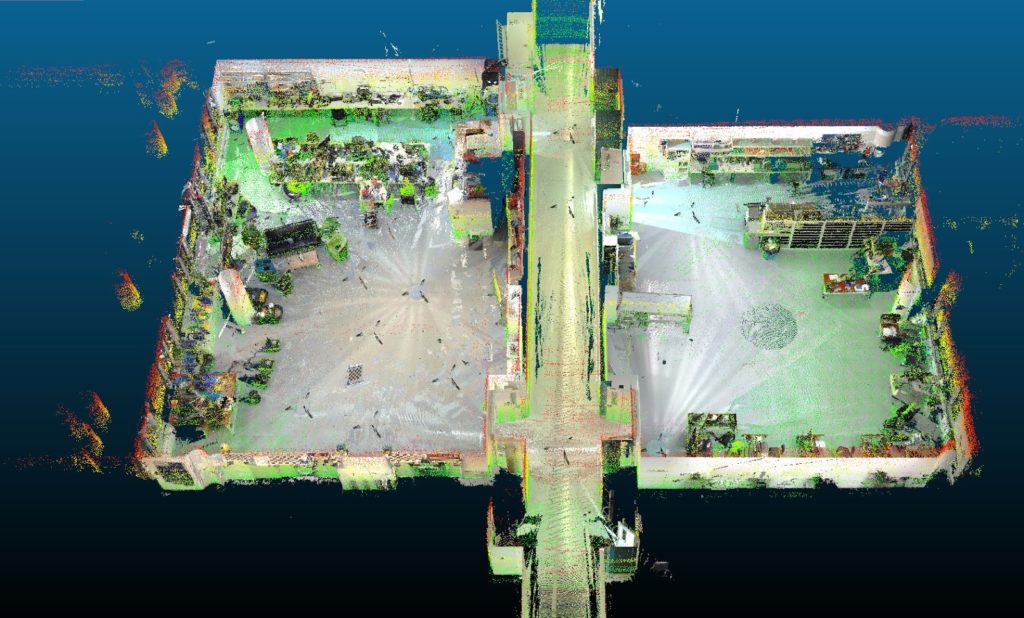

The aim of the Jackal UGV-based project is to generate comprehensive, high-resolution datasets for the benefit of the SLAM community via an advanced mapping robot featuring a sensor suite “previously unseen” in mobile robotics.

Highly customisable platform

Developing a reliable mapping robot and the software system for data recording were the key challenges of the project. On top of the mobile base platform, the team mounted two 32-channel LiDAR sensors, nine high-resolution cameras, one IMU, and a computing system built around an Intel i7 to collect the data. The LiDAR sensors were powered from the battery, while the cameras were powered via USB.

The positional data (odometry) for the robot was collected from three sources: an Xsens MTi-300, the Jackal’s ROS odometry (from its onboard IMU and motor encoders), and an OptiTrack tracking system (when in range).
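Because one of these sources is only available when the robot is in range, anyone working with such recordings has to decide which pose source to trust at a given moment. The following is a minimal Python sketch of one way to do that, not the team's own tooling: it looks up the sample closest in time and falls back to onboard odometry when no external tracking sample is near enough. The function names, the `(timestamps, values)` tuple layout, and the `max_gap` threshold of 0.05 s are all assumptions made for illustration.

```python
from bisect import bisect_left


def nearest_sample(timestamps, values, t, max_gap):
    """Return the value whose timestamp is closest to t.

    timestamps must be sorted in ascending order. Returns None when the
    stream is empty or the closest sample is more than max_gap seconds away.
    """
    if not timestamps:
        return None
    i = bisect_left(timestamps, t)
    # Only the neighbours on either side of the insertion point can be closest.
    candidates = [j for j in (i - 1, i) if 0 <= j < len(timestamps)]
    best = min(candidates, key=lambda j: abs(timestamps[j] - t))
    if abs(timestamps[best] - t) > max_gap:
        return None
    return values[best]


def pick_reference_pose(t, tracking, odometry, max_gap=0.05):
    """Prefer the external tracking pose near time t, else fall back to odometry.

    tracking and odometry are hypothetical (timestamps, values) tuples.
    Returns (source_name, pose), or (None, None) if neither stream has a
    sample within max_gap seconds of t.
    """
    pose = nearest_sample(tracking[0], tracking[1], t, max_gap)
    if pose is not None:
        return "tracking", pose
    pose = nearest_sample(odometry[0], odometry[1], t, max_gap)
    if pose is not None:
        return "odometry", pose
    return None, None


# Made-up example data: tracking covers only the first 20 ms of the run.
tracking = ([0.00, 0.01, 0.02], [(0.00, 0.0), (0.01, 0.0), (0.02, 0.0)])
odometry = ([0.00, 0.25, 0.50, 0.75], [(0.0, 0.0), (0.3, 0.0), (0.6, 0.0), (0.9, 0.0)])

print(pick_reference_pose(0.011, tracking, odometry))
print(pick_reference_pose(0.5, tracking, odometry))
```

With the made-up data above, the first query is served by the tracking stream and the second falls back to odometry, because the nearest tracking sample is almost half a second away.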

To run the robot’s processes, the team used the Clearpath open-source software stack for Jackal UGV. They also developed a bespoke system to synchronise all of the sensors in hardware. In the same vein, the team paid close attention to calibrating their many sensors for maximum accuracy. Finally, the robot had to prove its real-world applicability: the team collected three datasets and ran 2D LiDAR, 3D LiDAR, and visual SLAM algorithms on them.

Hitting the ground running with ROS

The team’s real focus was testing and applying their algorithms. They didn’t want to spend valuable time building a new mobile platform from the ground up, so they opted for the pre-built, ready-out-of-the-box Jackal UGV. What made Jackal UGV stand out was its robustness and reliability, high speed, high mobility, and readily available documentation.

As the team lead, Sören Schwertfeger, commented: “It offers plenty of space inside the robot to install a fast computer and is just the right size for the sensor payload. The Jackal is very easy to use because of its nice ROS interface and it is very reliable, so we did not need to spend any efforts in this project on the mobile base or the power source. I chose Clearpath Robotics over its competitors because I cherish the reliability and ease of use of their products”.

Husky UGV and Jackal UGV tag-team

With the project a success and its three datasets published, the team is already working on expanding its scope. Currently, they are testing a significantly upgraded version of the mapping robot, which will use four 3D LiDAR sensors with a total of 576 beams, as well as four solid-state LiDARs.

In addition to an omnidirectional camera, they plan to mount ten 5 MP cameras running at 60 Hz, stereo infrared and stereo event cameras, and two differential GPS receivers. To reliably collect all this data, the mobile robot would need a cluster of 12 computers! This sensor suite, however, will require a bigger mobile base. Luckily, Clearpath has a range of mobile platforms for all types of payload and terrain, so the team plans to switch over to Husky UGV for this application.
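A rough calculation shows why recording this sensor suite calls for so much computing power. The Python sketch below estimates the raw image data rate of the ten planned cameras alone. It assumes uncompressed images at 1 byte per pixel (for example 8-bit raw sensor output); the article does not state the pixel format, so the true figure may be higher or lower. The function name and parameters are illustrative.

```python
def raw_camera_data_rate(num_cameras, megapixels, fps, bytes_per_pixel=1):
    """Uncompressed image data rate in bytes per second.

    bytes_per_pixel=1 is an assumption (8-bit raw output); a colour format
    such as RGB8 would use 3 bytes per pixel instead.
    """
    return num_cameras * megapixels * 1_000_000 * fps * bytes_per_pixel


# Figures from the planned upgrade: ten cameras, 5 MP each, 60 Hz.
rate = raw_camera_data_rate(num_cameras=10, megapixels=5, fps=60)

print(f"{rate / 1e9:.1f} GB/s")                 # 3.0 GB/s
print(f"{rate * 60 / 1e9:.0f} GB per minute")   # 180 GB per minute
```

Under these assumptions the cameras alone produce about 3 GB of image data every second, before any LiDAR, infrared, event-camera, or GPS data is added, which helps explain why the recording load is spread across a cluster of computers.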