A Comprehensive Guide: Choosing the Right Sensors for Automated and Autonomous Platforms

Introduction:

Are you ready to embark on the exciting journey of building an automated or autonomous platform? In the rapidly evolving field of autonomy, selecting appropriate sensors is essential for the safe and efficient operation of automated and autonomous platforms. It is one of the most important decisions you’ll face, because these sensors serve as the eyes and ears of your platform.

This blog series aims to provide a comprehensive guide to help engineers navigate the process of choosing the right sensors for their autonomous platforms. By understanding the key considerations and exploring various sensor options, you can make informed decisions that will contribute to the success of your autonomous platform’s perception system.

We will start with the sensors generally associated with automated or autonomous platforms, offering an introduction to the different types. In the following weeks, we will look at understanding the requirements of your project and then take a deep dive into each of the sensors. Later posts will cover topics such as Sensor Calibration and Sensor Fusion.

Building autonomous platforms requires a keen understanding of the sensors that enable them to perceive and navigate the world around them. In this blog post, we will explore some key sensor technologies and their roles in the perception systems of autonomous platforms. From Lidar’s precision in perception to radar’s reliability in adverse conditions, cameras’ visual perception capabilities, and ultrasonic sensors’ close-range awareness, each sensor brings unique advantages to the table. By comprehending their characteristics and applications, engineers can make informed decisions when selecting sensors for their autonomous platforms.

Lidar: Precision in Perception:

Lidar (Light Detection and Ranging) technology has revolutionized the field of perception for autonomous platforms. By employing laser beams, Lidar sensors enable precise measurement of distances and the creation of detailed 3D maps of the surrounding environment. The key advantage of Lidar lies in its ability to provide highly accurate and high-resolution data, making it an invaluable tool for object detection, mapping, and localization.

When Lidar sensors emit laser pulses, they measure the time it takes for the light to reflect back to the sensor. By calculating these precise time intervals, Lidar sensors can accurately determine the distances to objects within their field of view. This capability allows for the creation of point cloud data, which represents the spatial distribution of objects in the environment.
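The time-of-flight calculation described above can be sketched in a few lines. This is a minimal illustration rather than any vendor's implementation: the function name `tof_to_distance_m` is hypothetical, and the sketch assumes a single clean return and the vacuum speed of light.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum (assumed medium)


def tof_to_distance_m(round_trip_time_s: float) -> float:
    """Convert a round-trip pulse time to a one-way distance in metres."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the target and back, so halve the path length.
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


if __name__ == "__main__":
    # A return after 200 ns corresponds to a target roughly 30 m away.
    print(f"{tof_to_distance_m(200e-9):.2f} m")  # prints "29.98 m"
```

The numbers show why Lidar timing electronics matter: one nanosecond of timing error corresponds to about 15 cm of range error.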

The resulting point cloud data can then be processed using advanced algorithms and techniques to identify objects and their positions. This process, known as point cloud segmentation, involves grouping the data points into distinct objects based on their spatial relationships and characteristics. By analysing the point cloud, autonomous platforms can discern obstacles, road features, and other important elements in their surroundings.
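One simple way to group points by spatial proximity is Euclidean clustering, sketched below. This is only one of many segmentation approaches and is not necessarily what a given Lidar stack uses. The function name `euclidean_clusters` and the `max_gap` threshold are assumptions made for illustration, and the brute-force neighbour search would normally be replaced by a k-d tree or voxel grid for real point clouds.

```python
from collections import deque
from math import dist


def euclidean_clusters(points, max_gap):
    """Group point indices so each point lies within max_gap of another
    point in the same cluster (single-linkage region growing)."""
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        queue = deque([seed])
        cluster = [seed]
        while queue:
            current = queue.popleft()
            # Brute-force neighbour search over the points not yet assigned.
            neighbours = [i for i in unvisited
                          if dist(points[current], points[i]) <= max_gap]
            for i in neighbours:
                unvisited.remove(i)
                queue.append(i)
                cluster.append(i)
        clusters.append(sorted(cluster))
    return sorted(clusters)


if __name__ == "__main__":
    cloud = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.2, 0.1, 0.0),
             (5.0, 5.0, 0.0), (5.1, 5.0, 0.0)]
    print(euclidean_clusters(cloud, max_gap=0.3))  # prints [[0, 1, 2], [3, 4]]
```

The choice of `max_gap` is a trade-off: too small and a single object splits into fragments, too large and neighbouring objects merge.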

Lidar technology finds extensive use in various autonomous platforms, including unmanned aerial vehicles (UAVs), unmanned ground vehicles (UGVs), and autonomous robots. In UAVs, Lidar enables accurate terrain mapping and obstacle avoidance during flight, crucial for safe and efficient navigation. UGVs equipped with Lidar sensors can detect and classify objects in their path, enabling autonomous operation in complex environments. Similarly, Lidar plays a vital role in the perception systems of autonomous robots, allowing them to navigate and interact with their surroundings with precision and efficiency.

The benefits of Lidar extend beyond its use in autonomous platforms. It has found applications in fields such as environmental monitoring, surveying, and even in the entertainment industry for creating realistic virtual environments. Its ability to generate highly detailed 3D maps makes Lidar an indispensable tool in various industries and research domains.

As technology continues to advance, Lidar systems are becoming more compact, affordable, and capable. Newer generations of Lidar sensors offer improved range, resolution, and reliability, allowing for enhanced perception capabilities in autonomous platforms. Furthermore, ongoing research and development efforts are focused on optimizing Lidar systems for specific use cases, such as urban environments, where dense and dynamic scenes require robust perception solutions.

In conclusion, Lidar technology plays a pivotal role in the precision and accuracy of perception systems for autonomous platforms. Its ability to measure distances and generate detailed 3D maps provides valuable information for object detection, mapping, and localization. By leveraging Lidar’s capabilities, unmanned aerial vehicles, unmanned ground vehicles, and autonomous robots can navigate and interact with their surroundings with confidence and efficiency. As Lidar technology continues to evolve, we can expect even more sophisticated and capable systems that will drive the advancement of autonomy across diverse industries.

Radar: Sensing in Adverse Conditions:

In the realm of autonomous platforms, Radar sensors play a critical role in providing robust perception capabilities, especially in challenging weather conditions and adverse environments. Utilizing radio waves, Radar sensors detect objects and gather essential information about their distance, speed, and angle. Their ability to excel in adverse weather conditions, such as rain, fog, or dust, makes them a reliable solution for long-range detection in various applications.

The functioning principle of Radar involves the emission of radio waves from the sensor, which propagates through the environment. When these waves encounter objects in their path, they reflect back to the Radar sensor. By analysing the characteristics of these reflections, including their time of arrival and strength, Radar systems can determine the presence, location, and properties of objects in the surroundings.

One of the notable advantages of Radar is its ability to operate effectively in adverse weather conditions. Unlike other sensing technologies that may be affected by factors like rain or fog, Radar’s radio waves are capable of penetrating through such environmental disturbances. This characteristic enables Radar sensors to provide reliable detection capabilities even when visibility is significantly reduced.

Radar sensors are particularly useful in complementing other sensing technologies like Lidar. While Lidar excels in providing detailed 3D maps and precise object detection, Radar adds an extra layer of redundancy and safety to the perception system. By offering a different sensing modality, Radar can mitigate limitations that may arise in certain scenarios, such as when objects have low reflectivity or when environmental conditions hinder optimal Lidar performance.

One of the key advantages of Radar sensors is their ability to detect large objects accurately. Buildings, infrastructure, and other vehicles are readily detected by Radar due to their significant size and reflective properties. By providing information about the distance, speed, and angle of these objects, Radar enables collision avoidance and enhances overall situational awareness in autonomous platforms.

Moreover, Radar sensors offer valuable velocity information, which is crucial for assessing the dynamic behaviour of objects in the environment. By measuring the Doppler shift in the reflected radio waves, Radar can determine the relative velocity of objects, including their direction and speed. This velocity information plays a vital role in collision prediction, trajectory planning, and overall navigation safety for autonomous platforms.
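The relationship between Doppler shift and relative velocity can be illustrated with a short sketch. It assumes a monostatic Radar (transmitter and receiver in the same place), target speeds far below the speed of light, and a sign convention in which a positive shift means the target is approaching. The function names and the 77 GHz carrier are example choices, not values taken from a particular sensor.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def doppler_shift_hz(radial_velocity_m_s: float, carrier_hz: float) -> float:
    """Doppler shift seen by a monostatic radar for a given closing speed."""
    # The factor of 2 accounts for the two-way (out and back) propagation.
    return 2.0 * radial_velocity_m_s * carrier_hz / SPEED_OF_LIGHT_M_S


def radial_velocity_m_s(doppler_hz: float, carrier_hz: float) -> float:
    """Invert the relation above: closing speed from a measured shift."""
    return doppler_hz * SPEED_OF_LIGHT_M_S / (2.0 * carrier_hz)


if __name__ == "__main__":
    carrier = 77e9  # example carrier frequency in Hz (assumption)
    shift = doppler_shift_hz(10.0, carrier)
    print(f"10 m/s closing speed -> {shift:.0f} Hz shift")
    print(f"recovered speed: {radial_velocity_m_s(shift, carrier):.2f} m/s")
```

Note that Doppler measures only the radial component of velocity, along the line between the sensor and the object. Motion across the beam produces little or no shift.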

The integration of Radar sensors within autonomous platforms brings a range of benefits. Their ability to operate in adverse weather conditions ensures that perception systems remain reliable and effective, even in challenging environments. The detection of large objects and the provision of velocity information contribute to collision avoidance and situational awareness, critical components for safe and efficient autonomous operations.

As technology advances, Radar systems continue to evolve, with improved range, resolution, and reliability. Modern Radar sensors can detect and track objects at extended distances, providing a comprehensive view of the environment. Ongoing research and development efforts focus on improving Radar performance, reducing size and cost, and exploring advanced signal processing techniques to further enhance perception capabilities.

In conclusion, Radar sensors are a vital component of perception systems for autonomous platforms. With their ability to utilize radio waves to detect objects and measure their distance, speed, and angle, Radar sensors excel in adverse weather conditions, providing reliable long-range detection. By complementing other sensing technologies and offering redundancy, Radar enhances overall safety and collision avoidance capabilities. As autonomous technology progresses, Radar systems will continue to play a significant role in enabling autonomy in various industries, contributing to the advancement of safer and more efficient autonomous platforms.

Cameras: Visual Perception:

In the realm of autonomous platforms, cameras stand as an indispensable sensory tool, playing a vital role in visual perception. With the ability to capture real-time images and videos, cameras enable a wide range of essential functionalities, including object recognition, scene understanding, and navigation. The advancements in computer vision and artificial intelligence algorithms have revolutionized camera-based perception systems, significantly enhancing their capabilities and expanding their application in autonomous platforms.

One of the key strengths of cameras is their capacity to provide rich visual information. By capturing high-resolution images and videos, cameras offer a comprehensive view of the environment surrounding the autonomous platform. This visual data serves as a valuable resource that can be processed and analysed to extract meaningful insights about the surroundings. Through sophisticated computer vision algorithms, cameras can identify objects, track their movements, and estimate their poses, enabling a robust perception system for autonomous platforms.

The integration of advanced artificial intelligence algorithms has propelled camera-based perception systems to new heights. Machine learning techniques, such as deep neural networks, have demonstrated remarkable success in object recognition tasks. By training on vast datasets, these algorithms can learn to detect and classify a wide range of objects with high accuracy. Cameras, with their ability to capture detailed visual information, provide the input necessary for training and deploying these powerful algorithms in autonomous platforms.

Camera sensors have the unique capability of recognizing and interpreting visual cues within the environment. Road signs, traffic lights, lane markings, and other visual indicators are crucial elements for safe and efficient navigation. Cameras, with their high-resolution imagery and sophisticated image processing techniques, can extract these visual cues from the environment and interpret their meaning. This enables autonomous platforms to understand traffic rules, recognize potential hazards, and make informed decisions based on the visual information captured by the cameras.

The integration of camera sensors into autonomous platforms significantly enhances their situational awareness. By providing a visual perspective of the surroundings, cameras enable a more comprehensive understanding of the environment. This situational awareness is essential for tasks such as path planning, obstacle avoidance, and dynamic object tracking. Cameras, with their ability to capture real-time visual data, enable autonomous platforms to adapt and respond to changing conditions in real time, ensuring safe and efficient operations.

Furthermore, continuous advancements in camera technology have led to specialized camera sensors for specific autonomous platform applications. For example, stereo cameras, which view the scene through two or more offset lenses, allow for depth perception and 3D reconstruction of the environment. This depth information can be used for precise object localization and obstacle avoidance. Thermal cameras, on the other hand, detect heat signatures, which is useful for identifying living organisms, such as humans or animals, in certain scenarios.
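To show how a stereo pair yields depth, here is a minimal sketch of the standard pinhole relation: depth equals focal length times baseline divided by disparity. It assumes a calibrated, rectified stereo pair. The function name and the example numbers are illustrative only.

```python
def stereo_depth_m(focal_length_px: float, baseline_m: float,
                   disparity_px: float) -> float:
    """Depth of a point from its disparity in a rectified stereo pair."""
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Example values (assumptions): 700 px focal length, 12 cm baseline.
    for disparity in (70.0, 14.0, 7.0):
        depth = stereo_depth_m(700.0, 0.12, disparity)
        print(f"disparity {disparity:5.1f} px -> depth {depth:.2f} m")
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant objects than for nearby ones. This is one reason stereo depth is usually treated as a short-to-medium-range measurement.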

As technology progresses, cameras are poised to play an even more critical role in the field of autonomous platforms. The ongoing advancements in image sensor technology, including higher resolutions, wider dynamic ranges, and improved low-light performance, contribute to the overall effectiveness of camera-based perception systems. Additionally, the integration of multi-camera setups and the fusion of camera data with data from other sensors further enhance the perception capabilities of autonomous platforms, providing a more comprehensive and reliable understanding of the environment.

In conclusion, cameras are integral to the visual perception of autonomous platforms. With their ability to capture real-time images and videos, cameras enable object recognition, scene understanding, and navigation. The advancements in computer vision and artificial intelligence algorithms have revolutionized camera-based perception systems, enabling them to extract valuable information from visual data. By integrating cameras into autonomous platforms, situational awareness is enhanced, enabling safer and more efficient operations. As camera technology continues to evolve, the capabilities of camera-based perception systems will continue to expand, driving the progress of autonomy in various industries.

Ultrasonic Sensors: Close-Range Awareness:

When it comes to close-range awareness, ultrasonic sensors take the spotlight in the realm of autonomous platforms. By utilizing sound waves, these sensors offer a reliable means of detecting objects in proximity, making them an invaluable asset for obstacle detection, collision avoidance, and low-speed manoeuvring. With their ability to emit high-frequency sound waves and measure the time it takes for the waves to bounce back after hitting an object, ultrasonic sensors provide crucial information about the immediate surroundings, enhancing the safety and precision of autonomous platforms.

Ultrasonic sensors operate on a straightforward principle: they emit a burst of high-frequency sound waves and then measure the time it takes for the waves to reflect and return to the sensor. By analysing the time of flight, the sensors can determine the distance between themselves and nearby objects. This distance information is vital for detecting obstacles within a short range, enabling autonomous platforms to navigate and manoeuvre in confined spaces with precision and confidence.
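The same time-of-flight idea can be sketched for sound. This example assumes dry air and uses the common linear approximation for the speed of sound, about 331.3 + 0.606 × T m/s with T in degrees Celsius, which is reasonable near room temperature. The function names are hypothetical.

```python
def speed_of_sound_m_s(air_temp_c: float) -> float:
    """Approximate speed of sound in dry air (linear fit near room temp)."""
    return 331.3 + 0.606 * air_temp_c


def echo_to_distance_m(echo_time_s: float, air_temp_c: float = 20.0) -> float:
    """Convert a round-trip echo time to a one-way distance in metres."""
    if echo_time_s < 0:
        raise ValueError("echo time must be non-negative")
    # The burst travels to the obstacle and back, so halve the path length.
    return speed_of_sound_m_s(air_temp_c) * echo_time_s / 2.0


if __name__ == "__main__":
    echo = 5.8e-3  # example echo time in seconds
    for temp in (0.0, 20.0, 40.0):
        print(f"{temp:4.1f} C -> {echo_to_distance_m(echo, temp):.3f} m")
```

Running the loop shows the same echo time mapping to noticeably different distances at different temperatures, which is why many ultrasonic modules include a temperature input.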

The short-range detection capability of ultrasonic sensors makes them particularly useful for low-speed navigation scenarios. When autonomous platforms operate in environments that require cautious movements, such as parking lots, indoor spaces, or crowded areas, ultrasonic sensors provide essential close-range awareness. By continuously scanning the immediate surroundings, these sensors detect obstacles that might be out of the field of view of other sensors, ensuring that the platform remains safe and avoids collisions.

Docking is another application where ultrasonic sensors shine. When autonomous platforms need to align themselves with docking stations or other objects for charging, loading, or other purposes, ultrasonic sensors play a pivotal role in achieving precise and reliable positioning. By detecting the distance between the platform and the docking station, these sensors assist in guiding the platform for successful and efficient docking manoeuvres.

The accuracy and reliability of ultrasonic sensors, combined with their fast response times, contribute to the overall safety of autonomous platforms. By providing real-time information about the proximity of objects, these sensors enable platforms to take immediate action to avoid collisions or adjust their trajectories. The close-range awareness provided by ultrasonic sensors acts as an additional layer of protection, complementing other sensors such as lidar, radar, and cameras, and enhancing the platform’s ability to navigate complex environments safely.

Moreover, the simplicity and cost-effectiveness of ultrasonic sensors make them a popular choice for integration into autonomous platforms. Their robustness in harsh environments, including resistance to dust and moisture, makes them suitable for a wide range of applications, although range readings usually need compensation for air temperature because the speed of sound varies with it. Whether it’s indoor navigation, warehouse robotics, or small-scale autonomous vehicles, ultrasonic sensors provide a practical and reliable solution for close-range awareness.

As technology continues to advance, ultrasonic sensors are also evolving to meet the increasing demands of autonomy. Modern ultrasonic sensors offer improved performance, higher precision, and extended detection ranges, expanding their capabilities and applicability in various scenarios. Additionally, the integration of multiple ultrasonic sensors in different orientations allows for a more comprehensive understanding of the surrounding environment, enhancing the platform’s ability to detect and avoid obstacles from multiple angles.

In conclusion, ultrasonic sensors are essential for close-range awareness in autonomous platforms. By utilizing sound waves and measuring their reflection times, these sensors enable obstacle detection, collision avoidance, and precise manoeuvring in low-speed scenarios. Their short-range detection capability and fast response times make them indispensable in navigating confined spaces and crowded environments. Ultrasonic sensors contribute to the overall safety of autonomous platforms by providing vital close-range awareness and complementing other sensing technologies. With ongoing advancements, ultrasonic sensors will continue to play a vital role in enhancing the capabilities of autonomous platforms and driving the progress of autonomy in various industries.

GPS: Global Positioning:

When it comes to autonomous platforms, GPS (Global Positioning System) takes centre stage in providing accurate positioning and navigation information. As a global satellite-based navigation system, GPS plays a vital role in enabling autonomous platforms to determine their precise location on the Earth’s surface and navigate effectively. By receiving signals from multiple satellites, GPS receivers leverage the time delay to calculate the platform’s exact coordinates, enhancing the overall autonomy, efficiency, and reliability of these platforms.

The fundamental principle behind GPS is trilateration. GPS receivers capture signals transmitted by multiple satellites orbiting the Earth. Each satellite broadcasts a unique signal containing information about its precise position and the time of transmission. By measuring the time delay between the transmission and reception of signals from different satellites, GPS receivers can determine the distance between the satellites and themselves. With this information, along with the known positions of the satellites, the receivers can precisely calculate their own position on the Earth’s surface.
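Trilateration is easiest to see in a simplified form. The sketch below works in two dimensions with three fixed reference points and exact ranges. A real GPS receiver solves the three-dimensional problem and must also estimate its own clock error, which is why it needs signals from at least four satellites. The function name `trilaterate_2d` and the coordinates are illustrative assumptions.

```python
from math import hypot


def trilaterate_2d(anchors, ranges):
    """Locate a point from its distances to three known 2D positions."""
    (x1, y1), (x2, y2), (x3, y3) = anchors
    r1, r2, r3 = ranges
    # Subtracting the first circle equation from the other two removes the
    # quadratic terms and leaves two linear equations in x and y.
    a11, a12 = 2.0 * (x2 - x1), 2.0 * (y2 - y1)
    a21, a22 = 2.0 * (x3 - x1), 2.0 * (y3 - y1)
    b1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; position is ambiguous")
    # Solve the 2x2 system with Cramer's rule.
    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - a21 * b1) / det
    return x, y


if __name__ == "__main__":
    anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
    truth = (3.0, 4.0)
    ranges = [hypot(truth[0] - ax, truth[1] - ay) for ax, ay in anchors]
    x, y = trilaterate_2d(anchors, ranges)
    print(f"estimated position: ({x:.2f}, {y:.2f})")  # prints (3.00, 4.00)
```

With noisy ranges the circles no longer meet at a single point, so practical receivers use a least-squares solution over all visible satellites instead of an exact solve.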

The accurate positioning provided by GPS is invaluable for autonomous platforms. By knowing their exact location, these platforms can navigate predefined routes, follow waypoints, and accurately plan their movements. GPS integration enables platforms to operate seamlessly across different geographical locations, whether it’s a self-driving car travelling through city streets or an unmanned aerial vehicle (UAV) surveying a vast area of land. The precise positioning information from GPS enhances the platform’s situational awareness and enables it to make informed decisions based on its real-time location.

Autonomous platforms heavily rely on GPS to achieve their intended tasks. For example, in the context of self-driving cars, GPS assists in determining the vehicle’s position on the road network and aids in accurate navigation. By combining GPS data with other sensing technologies like lidar, radar, and cameras, autonomous vehicles can accurately perceive their surroundings, identify road features, and plan optimal routes. GPS acts as a key component in the sensor fusion process, providing a global reference for the platform’s local perception and decision-making.

In addition to positioning, GPS also provides accurate timing information. Precise time synchronization is crucial for many autonomous systems, as it enables coordination between different components and facilitates data fusion. By having access to synchronized time information through GPS, autonomous platforms can ensure accurate data collection, sensor fusion, and synchronization between different subsystems. This synchronization enhances the overall efficiency and performance of the platform, enabling seamless coordination and communication between various components.

It’s worth noting that while GPS is highly accurate and widely used, it does have limitations. In certain scenarios, such as urban environments with tall buildings or areas with dense foliage, GPS signals can be obstructed or weakened, resulting in degraded accuracy. To mitigate these limitations, autonomous platforms often rely on complementary technologies such as inertial measurement units (IMUs) and other localization techniques to maintain accurate positioning and navigation in challenging environments.

As satellite navigation technology continues to evolve, advancements such as improved signal processing algorithms and the availability of additional satellite constellations further enhance its capabilities. For instance, receivers that combine GPS with other constellations such as GLONASS, Galileo, and BeiDou see more satellites at once, which increases availability and improves accuracy, enabling more reliable positioning and navigation for autonomous platforms across the globe.

In conclusion, GPS plays a pivotal role in autonomous platforms by providing accurate positioning and navigation information. By leveraging signals from multiple satellites, GPS receivers calculate the platform’s precise location and enable seamless navigation across different geographical locations. GPS integration enhances the overall autonomy and reliability of platforms, allowing them to operate efficiently and make informed decisions based on their real-time position. Despite its limitations, GPS technology continues to advance, expanding its capabilities and ensuring the continuous progress of autonomy in various industries.

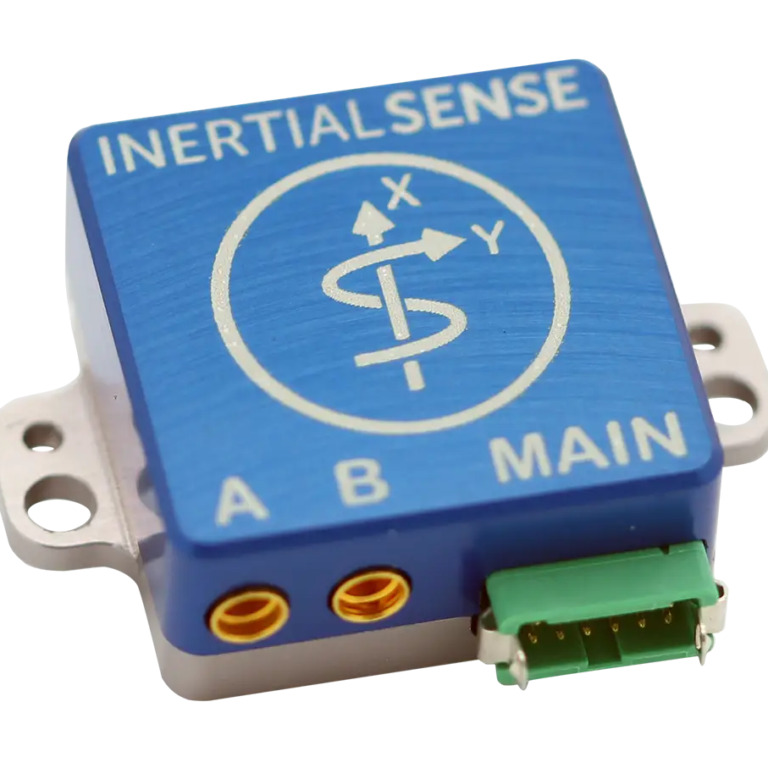

IMU (Inertial Measurement Unit): Navigating the World with Precision:

In the realm of autonomous platforms, IMU (Inertial Measurement Unit) sensors play a crucial role in providing precise motion tracking and orientation information. These sensors enable platforms to understand their movements, maintain stability, and navigate through dynamic environments. IMUs combine several sensing technologies, including accelerometers, gyroscopes, and magnetometers, to provide a comprehensive picture of the platform’s motion in three-dimensional space.

Accelerometers within the IMU measure linear acceleration along different axes. By detecting changes in velocity, these sensors enable platforms to gauge their speed, acceleration, and deceleration accurately. This information is essential for various applications, such as autonomous vehicles adjusting their speed based on traffic conditions or unmanned aerial vehicles (UAVs) stabilizing themselves during flight manoeuvres. IMU accelerometers offer real-time feedback on the platform’s linear motion, contributing to precise control and navigation.
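To make the link between acceleration and speed concrete, here is a minimal sketch that integrates evenly spaced accelerometer samples into velocity using the trapezoidal rule. It assumes a single axis with gravity already removed. The function name and sample values are hypothetical.

```python
def integrate_velocity(accels_m_s2, dt_s, v0_m_s=0.0):
    """Integrate evenly spaced acceleration samples into velocities."""
    velocities = [v0_m_s]
    for a_prev, a_next in zip(accels_m_s2, accels_m_s2[1:]):
        # Trapezoidal rule: average the two samples over the interval.
        velocities.append(velocities[-1] + 0.5 * (a_prev + a_next) * dt_s)
    return velocities


if __name__ == "__main__":
    # Constant 2 m/s^2 sampled five times at 10 Hz (four 0.1 s intervals).
    print(f"{integrate_velocity([2.0] * 5, 0.1)[-1]:.2f} m/s")  # prints "0.80 m/s"

    # A small uncorrected bias of 0.05 m/s^2 grows steadily when integrated.
    biased = integrate_velocity([0.05] * 601, 0.1)
    print(f"velocity error after 60 s: {biased[-1]:.2f} m/s")  # prints "3.00 m/s"
```

The second example shows why raw integration is rarely used alone: even a small constant bias produces a velocity error that grows linearly with time, and a position error that grows quadratically.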

Gyroscopes, another key component of IMU sensors, measure angular velocity or rotational motion. They enable platforms to understand their orientation and rate of rotation around different axes. Gyroscopes are instrumental in stabilizing platforms, compensating for external forces, and maintaining a desired orientation. In autonomous platforms like drones, gyroscopes help maintain stability and smooth flight paths by detecting and compensating for any rotational deviations. By combining accelerometer and gyroscope data, IMUs provide a complete picture of the platform’s motion, ensuring accurate navigation and control.

Magnetometers, the third component of an IMU, measure the strength and direction of magnetic fields. By sensing the Earth’s magnetic field, magnetometers allow platforms to determine their heading or compass direction. This information is particularly valuable in applications where absolute orientation is essential, such as autonomous navigation systems that require accurate heading information for path planning and localization. Magnetometers in IMUs contribute to precise orientation estimation, enhancing the platform’s ability to navigate and interact with its surroundings.
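A compass heading can be derived from the horizontal magnetometer components, as sketched below. The axis convention is an assumption: the sensor is level, its x axis points forward and its y axis points to the right. The sketch returns a heading relative to magnetic north, with no tilt compensation, declination correction, or calibration for nearby metal.

```python
from math import atan2, degrees


def magnetic_heading_deg(mag_x: float, mag_y: float) -> float:
    """Heading in degrees clockwise from magnetic north, in [0, 360).

    Assumes a level sensor with x forward and y to the right.
    """
    # With this convention the field reads (H*cos(h), -H*sin(h)) at heading h.
    return degrees(atan2(-mag_y, mag_x)) % 360.0


if __name__ == "__main__":
    # Readings are in arbitrary units; only the ratio matters.
    for label, reading in (("north", (1.0, 0.0)), ("east", (0.0, -1.0)),
                           ("south", (-1.0, 0.0)), ("west", (0.0, 1.0))):
        print(f"facing {label}: {magnetic_heading_deg(*reading):.1f} deg")
```

If your sensor uses a different axis layout, the signs inside `atan2` change, so it is worth checking the result against a known direction before relying on it.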

IMU sensors provide continuous, real-time data on the platform’s motion, making them particularly valuable for dynamic environments. Unlike external sensors like GPS that may suffer from signal loss or interference, IMUs offer autonomous platforms an internal navigation system that operates independently. This self-contained capability allows platforms to keep navigating through areas with limited or no GPS coverage, such as indoor environments or tunnels, although the position estimate drifts over time until an external reference corrects it.

The information provided by IMUs is crucial for numerous autonomy-related tasks. For example, in autonomous vehicles, IMUs assist in monitoring the vehicle’s roll, pitch, and yaw movements, contributing to stability control, path planning, and vehicle dynamics. In robotics applications, IMUs enable precise manipulation of robotic arms, helping maintain stability during intricate tasks. IMUs also find utility in virtual reality (VR) and augmented reality (AR) systems, providing users with accurate motion tracking and a seamless immersive experience.

To ensure optimal performance, IMUs often employ sensor fusion techniques, combining data from multiple sensors within the unit. By fusing accelerometer, gyroscope, and magnetometer data, IMUs provide a more robust and accurate estimation of the platform’s motion and orientation. Sensor fusion algorithms, such as Kalman filters, enable IMUs to mitigate individual sensor limitations and provide reliable information for autonomous platforms.
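A full Kalman filter is too long to show here, so the sketch below uses a complementary filter instead. It is a simpler and widely used technique that illustrates the same idea: trust the gyroscope over short time scales and the accelerometer over long ones. The function name, the blending weight `alpha`, and the simulated bias are assumptions for illustration.

```python
def complementary_filter(gyro_rates_rad_s, accel_angles_rad, dt_s,
                         alpha=0.98, initial_angle_rad=0.0):
    """Fuse gyro rates with accelerometer-derived angles for one axis."""
    if len(gyro_rates_rad_s) != len(accel_angles_rad):
        raise ValueError("inputs must have the same length")
    angle = initial_angle_rad
    estimates = []
    for rate, accel_angle in zip(gyro_rates_rad_s, accel_angles_rad):
        # Short term: propagate the previous estimate with the gyro rate.
        predicted = angle + rate * dt_s
        # Long term: pull the estimate towards the accelerometer angle.
        angle = alpha * predicted + (1.0 - alpha) * accel_angle
        estimates.append(angle)
    return estimates


if __name__ == "__main__":
    # Stationary platform: true angle is 0, but the gyro has a small bias.
    steps, dt, bias = 1000, 0.01, 0.01
    fused = complementary_filter([bias] * steps, [0.0] * steps, dt)
    print(f"gyro-only drift after 10 s: {bias * dt * steps:.4f} rad")
    print(f"fused estimate after 10 s:  {fused[-1]:.4f} rad")
```

In this simulated case the gyro-only estimate keeps drifting, while the fused estimate settles at a small bounded error. A Kalman filter goes further by weighting each sensor according to its estimated noise instead of a fixed `alpha`.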

IMU technology continues to advance, with ongoing research and development focusing on improving sensor accuracy, reducing noise, and enhancing overall performance. Miniaturization efforts have resulted in smaller and more power-efficient IMUs, making them suitable for a wide range of applications, including wearables, robotics, and Internet of Things (IoT) devices. Additionally, advancements in sensor calibration techniques and data fusion algorithms continue to enhance the accuracy and reliability of IMU-based navigation systems.

In conclusion, IMU sensors are indispensable for autonomous platforms, providing precise motion tracking and orientation information. By combining accelerometers, gyroscopes, and magnetometers, IMUs offer a comprehensive view of the platform’s movement in three-dimensional space. With their ability to provide real-time data, IMUs enable platforms to navigate dynamic environments accurately and maintain stability. As IMU technology advances, we can expect even more precise motion tracking, improved sensor fusion techniques, and widespread integration into various autonomy-driven applications across industries.

Conclusion:

Choosing the right sensors for autonomous platforms is a critical decision that significantly impacts their performance, safety, and efficiency. By understanding your specific requirements and considering factors such as environment, autonomy level, range, and object detection needs, you can lay a strong foundation for selecting the most suitable sensors.

In this part of our comprehensive guide, we introduced five key sensor types commonly used in autonomous platforms: Lidar, Radar, Cameras, Ultrasonic Sensors, and GPS with IMU. Lidar technology offers precision in perception with its ability to generate detailed 3D maps and provide accurate object detection and localization. Radar sensors excel in adverse weather conditions and provide additional redundancy for enhanced safety. Cameras, powered by advancements in computer vision and AI algorithms, play a vital role in visual perception, enabling object recognition and scene understanding. Ultrasonic sensors offer close-range awareness and are particularly useful for low-speed navigation and obstacle detection. GPS with IMU provides accurate positioning and navigation information, allowing platforms to determine their location and plan their movements across different geographical locations.

By combining these sensor technologies and leveraging their respective strengths, autonomous platforms can achieve a higher level of situational awareness, enabling them to navigate and interact with the surrounding environment effectively. However, it’s important to note that sensor selection is not a one-size-fits-all approach. Depending on the specific application and platform requirements, a combination of these sensors or even additional sensors may be necessary to achieve the desired level of autonomy, reliability, and performance.

As the field of autonomy continues to evolve, it’s crucial to stay updated with the latest advancements and emerging sensor technologies. By regularly assessing and reevaluating your sensor choices, integrating them seamlessly, and utilizing sensor fusion techniques, you can ensure that your autonomous platforms are equipped with the most effective and efficient sensing capabilities.

Collaborating with experts in the field of autonomy and leveraging their knowledge and experience will be invaluable in making informed decisions, optimizing the sensor configuration, and achieving the desired performance of your autonomous platforms.

With a well-selected and integrated sensor suite, including Lidar, Radar, Cameras, Ultrasonic Sensors, GPS, and IMU, your autonomous platforms will have the perception and navigation capabilities needed to navigate complex environments, avoid obstacles, and operate safely and efficiently. This will unlock a wide range of applications across industries, from autonomous vehicles to drones, robotics, and more, driving us closer to a future where autonomous systems play a central role in our everyday lives.